The Many Layers of Packaging

The packaging gradient, and why PyPI isn't an app store.

Update: I turned this post into a talk. The video from PyBay is here, the slides are available here. The long-cut video from BayPiggies is coming, but the "Extended Edition" slides are here.

One lesson threaded throughout Enterprise Software with Python is that deployment is not the last step of development. The mark of an experienced engineer is to work backwards from deployment, planning and designing for the reality of production environments.

You could learn this the hard way. Or you could come on a journey into what I call the packaging gradient. It's a quick and easy decision tree to figure out what you need to ship. You'll gain a trained eye, and an understanding as to why there seem to be so many conflicting opinions about how to package code.

The first lesson on our adventure is:

Implementation language does not define packaging solutions.

Packaging is all about target environment and deployment experience. Python will be used in examples, but the same decision tree applies to most general-purpose languages.

Python was designed to be cross-platform and runs in countless environments. But don't take this to mean that Python's built-in tools will carry you anywhere you want to go. I can write a mobile app in Python, but does it make sense to install it on my phone with pip? As you'll see, a language's built-in tools only scratch the surface.

So, one by one, I'm going to describe some code you want to ship, followed by the simplest acceptable packaging process that provides the repeatable deployment process we crave. We save the most involved solutions for last, right before the short version. Ready? Let's go!

- Prelude: The Humble Script

- The Python Module

- The pure-Python Package

- The Python Package

- Milestone: Outgrowing our roots

- Depending on pre-installed Python

- Depending on a new Python/ecosystem

- Bringing your own Python

- Bringing your own userspace

- Bringing your own kernel

- Bringing your own hardware

- But what about...

- Closing

Prelude: The Humble Script

Everyone's first exposure to Python deployment was something so innocuous you probably wouldn't remember. You copied a script from point A to point B. Chances are, whether A and B were separate directories or computers, your days of "just use cp" didn't last long.

Because while a single file is the ideal format for copying, it doesn't work when that file has unmet dependencies at the destination.

Even simple scripts end up depending on:

- Python libraries - boltons, requests, NumPy

- Python, the runtime - CPython, PyPy

- System libraries - glibc, zlib, libxml2

- Operating system - Ubuntu, FreeBSD, Windows

So every good packaging adventure always starts with the question:

Where is your code going, and what can we depend on being there?
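One way to make that question concrete is to ask the interpreter itself. The sketch below uses only the standard library and reports one data point for each of the four layers listed above. The function name `describe_environment` and the fields it picks are made up for illustration; they are one reasonable selection, not a complete inventory.

```python
import platform
import sys
import zlib


def describe_environment():
    """Report one data point per layer a script implicitly depends on."""
    return {
        # Python libraries: how many locations imports are searched in
        "import_path_entries": len(sys.path),
        # Python, the runtime: implementation name and version
        "runtime": f"{platform.python_implementation()} {platform.python_version()}",
        # System libraries: the zlib version the interpreter was built against
        "zlib": zlib.ZLIB_VERSION,
        # Operating system and CPU architecture
        "os": f"{platform.system()} {platform.machine()}",
    }
```

Running `describe_environment()` at both point A and point B, and comparing the results, is a cheap first check before copying a script anywhere.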

First, let's look at libraries. Virtually every project these days begins with library package management, a little pip install. It's worth a closer look!

The Python Module

Python library code comes in two sizes, module and package, practically corresponding to files and directories on disk. Packages can contain modules and packages, and in some cases can grow to be quite sprawling. The module, being a single file, is much easier to redistribute.

In fact, if a pure-Python module imports nothing but the standard library itself, you have the unique option of being able to distribute it by simply copying the single file into your codebase.

This type of inclusion, known as vendoring, is often glossed over, but bears many advantages. Simple is better than complex. No extra commands or formats, no build, no install. Just copy the code[1] and roll.

For examples of libraries doing this, see bottle.py, ashes, schema, and, of course, boltons, which also has an architectural statement on the topic.

The pure-Python Package

Packages are the larger unit of redistributable Python. Packages are directories of code containing an __init__.py. Provided they contain only pure-Python modules, they can also be vendored, similar to the module above. Even very popular packages like pip itself can be found with vendor, lib, and packages directories.

Because these packages nest and sprawl, vendoring can lead to codebases that feel unwieldy. While it may seem awkward to have lib directories many times larger than your application, it's more common than some less-experienced devs might expect. That said, having worked on some very large codebases, I can definitely understand why core Python developers created other options for distributing Python libraries.

For libraries that only contain Python code, whether single-file or multi-file, Python's original built-in solution still works today: sdists, or "source distributions". This early format has worked for well over a decade and is still supported by pip and the Python Package Index (PyPI)[2].

The Python Package

Python is a great language, and one which is made all the greater by its power to integrate.

Many libraries contain code in C, Cython, and other statically-compiled languages that need build tools. If we distribute such code using sdists, installation will trigger a build that will fail without the tools, will take time and resources if it succeeds, and will generally involve more intermediary languages and four-letter keywords than Python devs thought should be necessary.

When you have a library that requires compilation, then it's definitely time to look into the wheel format.

Wheels are named after wheels of cheese, found in the proverbial cheese shop. Aptly named, wheels really help get development rolling. Unlike with sdists, the publisher does all the building, resulting in a system-specific binary.

The install process just decompresses and copies files into place. It's so simple that even pure-Python code gets installed faster when packaged as a wheel instead of an sdist.

Now even when you upload wheels, I still recommend uploading sdists as a fallback solution for those occasions when a wheel won't work. It's simply not possible to prebuild wheels for all configurations in all environments. If you're curious what that means, check out the design rationale behind manylinux1 wheels.
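The "system-specific" part is visible right in a wheel's filename, which the wheel specification (PEP 427) lays out as distribution, version, an optional build tag, then Python, ABI, and platform tags. Here is a minimal sketch of reading those tags; `WheelName` and `parse_wheel_filename` are illustrative names, not part of pip or any packaging library, and the filenames in the usage note are made up.

```python
from typing import NamedTuple, Optional


class WheelName(NamedTuple):
    distribution: str
    version: str
    build: Optional[str]
    python_tag: str
    abi_tag: str
    platform_tag: str

    @property
    def is_pure(self):
        # Pure-Python wheels declare no ABI and no specific platform
        return self.abi_tag == "none" and self.platform_tag == "any"


def parse_wheel_filename(filename):
    """Split a wheel filename into the fields defined by PEP 427."""
    if not filename.endswith(".whl"):
        raise ValueError(f"not a wheel filename: {filename!r}")
    # Dashes inside distribution names are escaped to underscores,
    # so splitting on "-" yields exactly 5 or 6 fields.
    parts = filename[: -len(".whl")].split("-")
    if len(parts) == 5:
        dist, version, py, abi, plat = parts
        build = None
    elif len(parts) == 6:
        dist, version, build, py, abi, plat = parts
    else:
        raise ValueError(f"unexpected number of fields in {filename!r}")
    return WheelName(dist, version, build, py, abi, plat)
```

For a hypothetical `example_pkg-1.0-py3-none-any.whl`, `is_pure` is true and the wheel installs anywhere. For `example_ext-2.1-cp36-cp36m-manylinux1_x86_64.whl`, the tags pin the artifact to one interpreter, ABI, and platform, which is exactly why the sdist fallback still matters.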

Milestone: Outgrowing our roots

Now, three approaches in, we've hit our first milestone. So far, everything has relied on built-in Python tools. pip, PyPI, the wheel and sdist formats, all of these were designed by developers, for developers, to distribute code and tools to other developers.

In other words:

PyPI is not an app store.

PyPI, pip, wheels, and the underlying setuptools machinations are all designed for libraries. Code for developer reuse.

Going back to our first example, a "script" is more accurately described as a command-line application. Command-line applications can have a Python-savvy audience, so it's not totally unreasonable to host them on PyPI and install them with pip (or pipsi). But understand that we're approaching the limit for a good production and user-facing experience.

So let's get explicit. By default, the built-in packaging tools are designed to depend on:

- A working Python installation

- A network connection, probably to the Internet

- Pre-installed system libraries

- A developer who is willing to sit and watch dependencies recursively download at install-time, and debug version conflicts, build errors, and myriad other issues.

These are fine, and expected for development environments. Professionals are paid to do it, students pay to learn it, and there are even a few oddballs who enjoy this sort of thing.

Going into our next options, notice how we have shifted gears to support applications. Remember that distributing applications is more a function of target platform than of implementation language. This is harder than library distribution because we stop depending on layers of the stack, and the developer who would be there to ensure the setup works.

Depending on pre-installed Python

For our first foray into application distribution, we're going to maintain the assumption that Python exists in the target environment. This isn't the wildest assumption: at the time of writing, CPython 2 is available on virtually every Linux and Mac machine.

Taking Python for granted, we can turn to bundling up all of the Python libraries on which our code depends. We want a single executable file, the kind that you can double click or run by prefixing with a ./, anywhere on a Python-enabled host. The PEX format gets us exactly this.

The PEX, or Python EXecutable, is a carefully-constructed ZIP archive, with just a hint of bootstrapping. PEXs can be built for Linux, Mac, and Windows. Artifacts rely on the system Python, but unlike pip, a PEX does not install itself or otherwise affect system state. It uses mature, standard features of Python, successfully iterating on a broadly-used approach.
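The standard feature underneath is that Python can execute a ZIP archive that contains a `__main__.py`. The sketch below demonstrates that mechanism with the standard library's `zipapp` module. To be clear, this is not PEX and it bundles no third-party dependencies; it only illustrates the approach PEX iterates on. The helper name `build_and_run_zipapp` is made up for this example.

```python
import subprocess
import sys
import tempfile
import zipapp
from pathlib import Path


def build_and_run_zipapp(message):
    """Build a one-file executable archive, run it, and return what it printed."""
    with tempfile.TemporaryDirectory() as tmp:
        src = Path(tmp) / "app"
        src.mkdir()
        # __main__.py is the entry point Python looks for inside the archive
        (src / "__main__.py").write_text(f"print({message!r})\n")
        target = Path(tmp) / "app.pyz"
        # The interpreter argument becomes a shebang line, so on POSIX
        # systems the archive can also be run directly as ./app.pyz
        zipapp.create_archive(src, target, interpreter="/usr/bin/env python3")
        result = subprocess.run(
            [sys.executable, str(target)],
            capture_output=True,
            text=True,
            check=True,
        )
        return result.stdout.strip()
```

Like a PEX, the resulting file relies on a Python already being present and leaves system state untouched: nothing is installed, and deleting the file removes the application.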

A lot can be done with Python and Python libraries alone. If your project follows this approach, PEX is an easy choice. See this 15-minute video for a solid introduction.

Depending on a new Python/ecosystem

Plain old vanilla Python leaving you wanting? That factory-installed system software can leave a lot to be desired. Lucky for us there's an upgrade well within grasp.

Anaconda is a Python distribution with expanded support for distributing libraries and applications. It's cross-platform, and has supported binary packages since before the wheel. Anaconda packages and ships system libraries like libxml2, as well as applications like PostgreSQL, which fall outside the purview of default Python packaging tools. That's because while Anaconda might seem like an innocent Python distribution from the outside, internally Anaconda blends in characteristics of a full-blown operating system, complete with its own package manager, conda.

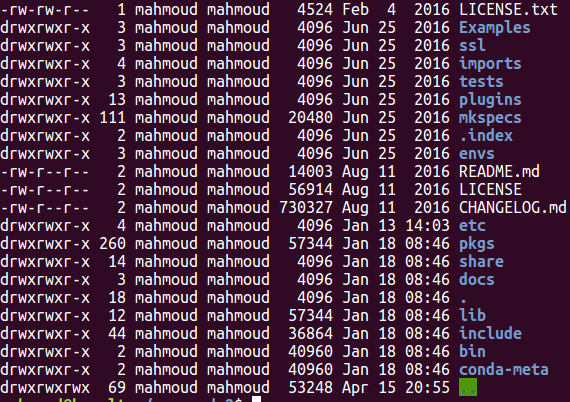

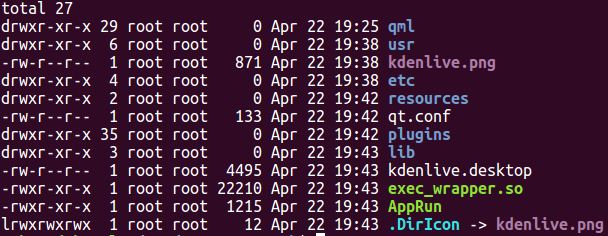

If you look inside of an Anaconda installation, or at the accompanying screenshot, you'll find something that looks a lot like a root Linux filesystem (lib, bin, include, etc.), with some extra Anaconda-specific directories.

What's remarkable is that the underlying operating system can be Windows, Mac, or basically any flavor of Linux. Just like that, Anaconda unassumingly blends Python libraries and system libraries, convenience and power, development and data science. And it does it all by using features built into Python and target operating systems.

Consider that the list of cross-platform and language-agnostic package managers includes only Steam, Nix, and pkgsrc, and you can start to understand why conda is often misunderstood. Adding onto that, conda is adding features fast. For instance, conda is the first Python-centric package manager to do its dependency resolution up front (using a SAT solver), unlike pip. More recently, conda 4.3 fulfilled the wishes of many by matching apt and yum with transactional package installation. Now conda matches operating system package managers in critical technical respects, except the wide-open social components of anaconda.org make it even easier to use than, say, PPAs.
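To see what resolving "up front" buys you, here is a toy sketch. It is emphatically not conda's algorithm: an exhaustive search stands in for the SAT solver, the `INDEX` of packages is entirely hypothetical, and the toy assumes exactly one version of every package in the index gets installed. The point is only that a complete, consistent set of versions is chosen before anything touches the system.

```python
from itertools import product

# Hypothetical index: package -> version -> {dependency: allowed versions}
INDEX = {
    "app": {"1.0": {"lib": {"1.0", "2.0"}, "util": {"2.0"}}},
    "lib": {"1.0": {}, "2.0": {"util": {"1.0"}}},
    "util": {"1.0": {}, "2.0": {}},
}


def resolve(index):
    """Pick one version of every package so all constraints hold, or return None.

    Versions are tried newest-first using plain string ordering,
    which is good enough for this toy data.
    """
    names = sorted(index)
    candidates = [sorted(index[name], reverse=True) for name in names]
    for combo in product(*candidates):
        chosen = dict(zip(names, combo))
        satisfied = all(
            chosen[dep] in allowed
            for name, version in chosen.items()
            for dep, allowed in index[name][version].items()
        )
        if satisfied:
            return chosen
    return None
```

A greedy installer that grabbed the newest `lib` first would discover the conflict over `util` only partway through. Checking whole combinations finds that the older `lib` is the version that works, and reports failure cleanly when nothing does.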

In short, Anaconda makes a compelling and effective case, both as a development environment comparable to pip + virtualenv, and even as part of the equation in production server environments. Python is lucky to host such a rare breed.

Bringing your own Python

Can you imagine deploying to an environment without Python? It's a hellish scenario, I know. Luckily, your code can still bring your own, and it's ice cold. Freezing, in fact.

When I wrote my first Python program, I naturally shared news of the accomplishment with my parents, who naturally wanted to experience this taste of The Future firsthand.

Of course all I had was a .py file I wrote on Knoppix, and they were halfway around the world on a Windows 2000 machine. Luckily, this new software called cx_Freeze was just announced a couple months earlier. Unluckily, no one told me, and the better part of a decade would pass before I learned how to use it.

Fifteen years later, the process has evolved, but retained the same general shape. Dropbox, EVE Online, Civilization IV, kivy, and countless other applications and frameworks rely on freezing to ship applications, generally to personal computing devices. Interpreter, libraries, and application logic, all rolled into an independent artifact.

These days the list of open-source tools has expanded beyond cx_Freeze to include PyInstaller, osnap, bbFreeze, py2exe, py2app, pynsist, nuitka, and more. There is even a conda-native option called constructor. A partial feature matrix can be found here.

Most of these systems give you some latitude to determine exactly how independent an executable to generate. Frozen artifacts almost always end up depending somewhat on the host operating system. See this py2exe tutorial discussion of Windows system libraries for a taste of the fun.

If you're wondering about the chilly moniker, freezers owe their name to their reliance on the "frozen module" functionality built into Python. It's sparsely documented, but basically Python code is precompiled into bytecode and frozen into the interpreter. As of Python 3.3, Python's import system was ported from C to a frozen pure-Python implementation.
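Both halves of that story can be observed from a running interpreter. In this small sketch, `FrozenImporter` is the standard library's importer for frozen modules, and `sys.frozen` is an attribute that freezers such as PyInstaller, cx_Freeze, and py2exe set inside the artifacts they build (a plain interpreter does not define it). The two helper names are made up for illustration.

```python
import sys
from importlib.machinery import FrozenImporter


def is_frozen_module(name):
    """True if `name` is compiled into the running interpreter as a frozen module."""
    return FrozenImporter.find_spec(name) is not None


def running_as_frozen_app():
    """True when running inside an artifact built by a freezer that sets sys.frozen."""
    return bool(getattr(sys, "frozen", False))
```

On Python 3.3 and later, `is_frozen_module("_frozen_importlib")` shows the import system's own bootstrap code living inside the interpreter. Application code often branches on `running_as_frozen_app()` to locate bundled data files.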

Servers ride the bus

Freezing tends to be targeted more toward client software. Frozen artifacts are a great fit for GUI and CLI applications run by a single user on a single machine at a time. When it comes to deploying server software bundled with its own Python, there is a very notable alternative: the Omnibus.

Omnibus builds "full-stack" installers designed to deploy applications to servers. It supports RedHat and Debian-based Linux distros, as well as Mac and Windows. A few years back, DataDog saw the light and made the switch for their Python-based agent. GitLab's on-premise solution is perhaps the largest open-source usage, and has been a joy to install and upgrade.

Unlike our multitude of freezers, Omnibus is uniquely elegant and mature. No other system has natively shipped multi-component/multi-service packages as sleekly for as long.

Bringing your own userspace

Probably the newest and fastest-growing class of solution has actually been a long time coming. You may have heard it referenced by its buzzword: containerization, sometimes crudely described as "lightweight virtualization".

Better descriptions exist, but the important part is this: Unlike other options so far, these packages establish a firm border between their dependencies and the libraries on the host system. This is a huge win for environmental independence and deployment repeatability.

In our own image

Let's illustrate with one of the simplest and most mature implementations, AppImage.

Since 2004, the aptly-named AppImage (and its predecessor klik) have been providing distro-agnostic, installation-free application distribution to Linux end users, without requiring root or touching the underlying operating system. AppImages only rely on the kernel and CPU architecture.

An AppImage is perhaps the most aptly-named solution in this whole post. It is literally an ISO9660 image containing an entrypoint executable, plus a snapshot of a filesystem comprising a userspace, full of support libraries and other dependencies. Looking inside a mounted Kdenlive image, it's easy to recognize the familiar structure of a Unix filesystem.

Dozens of headlining Linux applications ship like this now. Download the AppImage, make it executable, double-click, and voila.

If you're reading this on a Mac, you've probably had a similar experience. This is one of those rare cases where there's some consensus: Apple was one of the pioneers in image-based deployments, with DMGs and Bundles.

An image by any other name

No class of formats would be complete without a war. AppImage inspired the Flatpak format, which was adopted by RedHat/Fedora, but was of course insufficient for Canonical/Ubuntu, who were also targeting mobile, and created Snappy. A shiny update to our deb-rpm split tradition.

Both of these formats introduce more features, as well as more complexity and dependence on the operating system. Both Snaps and Flatpaks expect the host to support their runtime, which can include dbus, a systemd user session, and more. A lot of work is put into increased namespacing to isolate running applications into separate sandboxes.

I haven't actually seen these formats used for deploying server software. Flatpak might never support servers, Snappy is trying, but personally, I would really like to hear about or experiment with server-oriented AppImages.

The whale in the room

Some call the technology sphere a marketplace of ideas, and that metaphor is certainly felt in this case. Whether you've heard good things or bad, we can all agree Docker is the format sold the hardest. What else would you do when you've got $180 million of VC breathing down your neck?

Docker lets you make an application as self-contained as AppImage, but exceeds even Snapcraft and Flatpak in the assumptions it makes. Images are managed and run by yet another service with a lot of capabilities and tightly coupled components.

Docker's packaging abstraction reflects this complexity. Take for instance how Docker applications default to running as root, despite their documentation recommending against this. Default root is particularly unfriendly because namespacing is still not a reliable guard against malicious actors attacking the host system. Root inside the container is root outside the container. Always check the CVEs. The Docker security documentation also includes some good, frank discussion of what one is getting into.

Checking in with our trendline, so far we have been shipping larger, more-inclusive artifacts for more independent, reliable deployments. Some container systems present us with our first clear departure from this pattern. We no longer have a single executable that runs or installs our code. Technically we have a self-contained application, but we're also back to requiring an interpreter other than the OS and CPU.

It's not hard to imagine instances where the complexity of a runtime can overrun the advantages of self-containment. To quote Jessie Frazelle's blog post again, "Complexity == Bugs". This dynamic leads some to skip straight to our next option, but as AppImage simply demonstrates, this is not an impeachment of all image-based approaches.

Bringing your own kernel

Now we're really packing heavy. If having your Python code, libraries, runtime, and necessary system libraries isn't enough, you can add one more piece of machinery: the operating system kernel itself.

While this type of distribution never really caught on for consumers, there is a rich ecosystem of tools and formats for VM-based server deployment, from Vagrant to AMIs to OpenStack. The whole dang cloud.

Like our more complex container examples above, the images used to run virtual machines are not runnable executables, and require a mediating runtime, called a hypervisor. These days hypervisor machinery is very mature, and may even come standard with the operating system, as is the case with Windows and Mac. The images themselves come in a few formats, all of which are mature and dependable, if large. Size and build time may be the only deterrent for smaller projects prioritizing development time. Thanks to years of kernel and processor advancement, virtualization is not as slow as many developers would assume. If you can get your software shipped faster on images, then I say go for it.

Larger organizations save a lot from even small reductions to deployment and runtime overhead, but have to balance that against half a dozen other concerns worthy of a much longer discussion elsewhere.

Bringing your own hardware

In a software-driven Internet obsessed with lighter and lighter weight solutions, it can be easy to forget that a lot of software is literally packaged.

If your application calls for it, you can absolutely slap it on a rackable server, Raspberry Pi, or even a MicroPython board and physically ship it. It may seem absurd at first, but hardware is the most sensible option for countless cases. And not limited to just consumer and IoT use cases, either. Especially where infrastructure and security are concerned, hardware is made to fit software like a glove, and can minimize exposure for all parties.

But what about...

Before concluding, there are some usual suspects that may be conspicuously absent, depending on how long you've been packaging code.

OS packages

Where do OS packages like deb and RPM fit into all of this? They can fit anywhere, really. If you are very sure what operating system(s) you're targeting, these packaging systems can be powerful tools for distributing and installing code. There are reasons beyond popularity that almost all production container and VM workflows rely on OS package managers. They are mature, robust, and capable of doing dependency resolution, transactional installs, and custom uninstall logic. Even systems as powerful as Omnibus target OS packages.

In ESP's packaging segment, I touch on how we leveraged RPMs as a delivery mechanism for Python services in PayPal's production RHEL environment. One detail that would have been minor and confusing in that context, but should make sense to readers now, is that PayPal didn't use the vanilla operating system setup. Instead, all machines used a separate rpmdb and install path for PayPal-specific packages, maintaining a clear divide between application and base system.

virtualenv

Where do virtualenvs fit into all of this? Virtualenvs are indispensable for many Python development workflows, but I discourage direct use of virtualenvs for deployment. Virtualenvs can be a useful packaging primitive, but they need additional machinery to become a complete solution. The dh-virtualenv package demonstrates this well for deb packaging, but you can also make a virtualenv in an RPM post-install step, or by virtue of using an installer like osnap. The key is that the artifact and its install process should be self-contained, minimizing the risk of partial installs.
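As a rough idea of what such a post-install step might run, the sketch below creates an environment with the standard library's `venv` module, a close relative of the third-party virtualenv tool rather than the tool itself. Passing `with_pip=False` keeps the step from bootstrapping pip, so the installer is expected to copy already-built libraries into place. The function names are hypothetical, and a real post-install script would target a fixed install path instead of a temporary directory.

```python
import tempfile
import venv
from pathlib import Path


def create_bare_env(env_dir):
    """Create a virtual environment without pip and return its config file path."""
    venv.create(env_dir, with_pip=False)
    # pyvenv.cfg is the marker file that makes the directory an environment
    return Path(env_dir) / "pyvenv.cfg"


def demo_in_temp_dir():
    """Build a throwaway environment and confirm its marker file exists."""
    with tempfile.TemporaryDirectory() as tmp:
        return create_bare_env(Path(tmp) / "env").is_file()
```

Everything the environment needs then has to arrive inside the package that runs this step, which is the self-contained property the paragraph above asks for.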

This isn't virtualenv-specific, but lest it go unsaid, do not pip-install things, especially from the Internet, during production deploys. Scroll up and read about PEX.

Security

The further down the gradient you come, the harder it gets to update components of your package. Everything is more tightly bound together. This doesn't necessarily mean that it's harder to update in general, but it is still a consideration, when for years the approach has been to have system administrators and other technicians handle certain kinds of infrastructure updates.

For example, if a kernel security issue emerges, and you're deploying containers, the host system's kernel can be updated without requiring a new build on behalf of the application. If you deploy VM images, you'll need a new build. Whether or not this dynamic makes one option more secure is still a bit of an old debate, going back to the still-unsettled matter of static versus dynamic linking.

Closing

Packaging in Python has a bit of a reputation for being a bumpy ride. This is mostly a confused side effect of Python's versatility. Once you understand the natural boundaries between each packaging solution, you begin to realize that the varied landscape is a small price Python programmers pay for using the most balanced, flexible language available.

A summary of our lessons along the way:

- Language does not define packaging, environment does. Python is general-purpose, PyPI is not.

- Application packaging must not be confused with library packaging. Python is for both, but pip is for libraries.

- Self-contained artifacts are the key to repeatable deploys.

- Containment is a spectrum, from executable to installer to userspace image to virtual machine image to hardware. "Containers" are not just one thing, let alone the only option.

Now, with map in hand, you can safely navigate the rich terrain. The Python packaging landscape is converging, but don't let that narrow your focus. Every year seems to open new frontiers, challenging existing practices for shipping Python.

[1] Don't forget to include respective free software licenses, where applicable.

[2] Despite being called the Python Package Index, PyPI does not index packages. PyPI indexes distributions, which can contain one or more packages. For instance, pip installing Pillow allows you to import PIL. Pillow is the distribution, PIL is the package. The Pillow-PIL example also demonstrates how the distribution-package separation enables multiple implementations of the same API. Pillow is a fork of the original PIL package. Still, as most distributions only provide one package, please name your distribution after the package for consistency's sake.